On Monday and Tuesday I was pretty focused on the conference side of the OpenStack Summit, but with all the keynotes behind us, when Wednesday rolled around I found myself much more focused on the Design Summit side.

Our first session of the day was on Community Task Tracking, which we jokingly called the “task tracking bake-off.” As background, a couple of years ago the OpenStack Infrastructure team placed our bets on an in-project developed task tracker called StoryBoard. The hope had been that the intention to move off of Launchpad and onto this new platform would bring support from companies looking to help with development. Unfortunately this didn’t pan out. Development landed on the shoulders of a single poor, overworked soul. At this point we started looking at the Maniphest component of Phabricator. Simultaneously we ended up with a contributor putting together configuration management for Maniphest and had a team pop up to continue support of StoryBoard for a downstream that had begun using it. A few weeks ago I organized a bug day where the team got together to do a serious once-over of outstanding bugs and provide feedback to the StoryBoard team about what we need in order to use it. We went from 571 active bugs down to 414.

This set the stage for our session. We could stand up a Maniphest server or place our bets with StoryBoard again. We had a lot to consider.

| | Pros | Cons |
| --- | --- | --- |
| StoryBoard | Strong influence over direction, already running and being used in our infra, good API | We need to invest in development ourselves, little support for design/UI folks (though we could run a standalone Pholio) |
| Maniphest | Investment is made by a large existing development team, feature rich with pluggable components like Pholio for design folks | Little influence over direction (like with Gerrit), still have to stand up and migrate to, weak API |

Both had a few things lacking that we’d need before we go full steam into use by all of OpenStack, so there seemed to be consensus that they were similar in terms of the work and time needed to get to that point. Of all the considerations, the need to develop our own vs. depending on upstream is the one that weighed most heavily upon me. Will companies really step up and help with development once we move everyone into production? What happens if our excellent current StoryBoard developers are reassigned to other projects? Having an active upstream certainly is a benefit. The session didn’t end with a formal selection, but we will be discussing it more over the next couple of weeks so we can move toward making a recommendation to the Technical Committee (TC). Read-only session etherpad here.

The next session I attended was in the QA track, for the DevStack Roadmap. The session centered around finally making DevStack use Neutron by default. It’s been some time since nova-networking was deprecated, so this switch was a long time in coming. In addition to the technical components of this switch, the documentation around the networking decisions needs to be updated. Since I’ve just recently done some deep dives into OpenStack networking, somehow I ended up volunteering to help with this bit! Read-only session etherpad here.

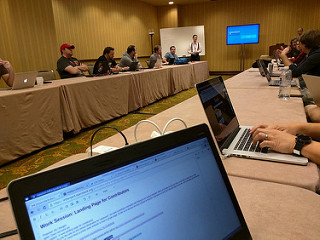

Before the very busy lunch I had coming up, there was one more morning session, on Landing Page for Contributors. The current pages we have on the wiki, like the Main page of the wiki itself and the How To Contribute page, aren’t the most welcoming. They’re more like walls of text that a new contributor has to sift through. This session talked through a lot of the tooling that could be used to make a more inviting, approachable page, drawing from other projects that have forged this path in the past. Of course it is also important that the content is reviewed and maintainable from the project perspective too, so something that can be held in revision control is key. Read-only session etherpad here.

As lunch rolled around I rushed upstairs to assist with the Git and Gerrit – Lunch and Learn. The event began by identifying and separating out about a third of the folks in the room who hadn’t completed the prerequisites. It was the job of the other helpers and me to start working with these folks to get their accounts set up and git-review installed. This wasn’t a trivial task. In spite of my intimate knowledge of how our system works and years of using it, almost all the attendees used Windows or Mac, while I use Linux full time, and we don’t maintain good (or any) documentation of our development workflow for these other operating systems.

A lot of folks did make it through configuration, and it was nice to be reminded that our community is growing and that our tools need to grow as well. A patch was submitted several months back to add a video of how to set things up on Windows, but that’s inconsistent with the rest of our documentation and has not been accepted. It would be great to see some folks using these other operating systems help us get the written documentation into better shape. Beyond the prerequisites, session leaders Amy Marrich and Tamara Johnston walked folks through setting up their environment, submitting a patch to the sandbox repo, submitting a test bug, reviewing a change and more. The slide deck they used has been uploaded to Amy’s AustinSummit GitHub project. I also took a few minutes to explain the Zuul Status page and a bit about each of the pipelines that a change may go through on the way to being merged.

Directly after lunch I was in another infrastructure session, this time to talk about Launch-Node, Ansible and Puppet. Launching new, long-lived servers in our infrastructure is one of those tasks that has remained frustratingly hands on. This manual work has been a time sink and a lot of it can be automated, so we as a team consider this situation a bug. Our Launch-Node script has been developed to start tackling this, and the session went through some of the things we need to be careful of, including handling of DNS and duplicate hostnames (what if we’re spinning up a replacement server?) and deciding when to unmount and disassociate cinder volumes and trove databases from the old server and bring them up on the new one. There was lots of great discussion around all of this. Fixes were already coming in by the end of this session and we have a good path moving forward. Read-only session etherpad here.

The next infrastructure session focused on Wiki upgrades. We’ve been struggling with spam problems for several months. We need to do an upgrade to get some of the latest anti-spam tooling, which also requires upgrading the operating system in order to get a newer version of PHP. The people-power we have for this is limited, as we all have a lot of other projects on our plates. The session began with outlining what we need to do to get this done, and wound down with the proposal to shut down the wiki in a year. The OpenStack project has great, collaborative tooling for publishing documentation, and we also use etherpads a lot for notes and to-do lists, so is there really still an active need for a wiki? Thierry Carrez sent an email today that started work on socializing our options, whether to carry on with the wiki or not. As the discussions continue on list, I hope to help in finding tooling for teams whose needs the current tools don’t satisfy. While we do that over the next year, Paul Belanger has bravely stepped forward to lead the ongoing maintenance of the wiki until the possible retirement. Read-only session etherpad here.

Thursday morning kicked off bright and early with a session on Proposal jobs. As some quick background, proposal jobs are run on a privileged server in the OpenStack infrastructure that has the credentials to publish to a few places, like translation files up to Zanata. With this in mind, and as general good policy, we like to keep the jobs we’re running here down to a minimum, using non-privileged servers as much as possible to complete tasks. The session walked through several of the existing jobs and new ones that were being proposed to sort through how they could be done differently, and to make sure we’re all on the same page as a team when it comes to approving new jobs on these servers. Read-only session etherpad here.

It was then on to a session to “Robustify” Ansible-Puppet. Several months back we switched over to a system of triggering Puppet runs with Ansible instead of using the Puppetmaster software. This process quickly became complicated, so much so that even I struggled to trace the whole path of how everything works. Thankfully Monty Taylor and Spencer Krum started off the session by whiteboarding how everything works together, or doesn’t, as the case may be. It was a huge help to see it sketched out so that the pain points could be identified, one of those rare times when it was super valuable to be together in a room as a team rather than trying to explain things over IRC. We learned that inventory creation for Ansible is one of our pain points, but the complexity of the whole system has made fixing problems tricky: you pull one thread and something else gets undone! We also discussed the status of logging, and how we can better prepare for edge cases where things Really Go Wrong and we can’t access the server to see the logs and find out what happened. There’s also some Puppetboard debugging to do, as folks rely on the data from that and it hasn’t been entirely accurate in reporting failures lately. In all, a great session. Read-only session etherpad here.

Next up for Infrastructure was a fishbowl session about OpenID/SSO for Community Systems. The OpenStack Foundation invested in the development of OpenStackID when few other options that fit our need were mature in this space. Today we have the option of using Ipsilon, which has a bigger development community and is already in use by another major open source project (Fedora). The session outlined the benefits of consuming an upstream tool instead, including its development model, security considerations and the general resources that have been spent to roll our own solution. The session also outlined exactly what our needs are to move all of our authentication away from Launchpad, which is hosted by Canonical. I think it was a good session with some healthy discussion about where we are with our tooling. Read-only session etherpad here.

I spent my time after lunch with the translations/internationalization (i18n) folks in a 90-minute work session on Translation Processes and Tools (read-only session etherpad here). My role in this session, along with Steve Kowalik and Andreas Jaeger, was to represent the infrastructure team and the tooling we could provide to help the i18n team get their work done. Of particular focus were the translations check site that we need to work toward bringing online and our plan to upgrade Zanata and the underlying operating system it’s running on. We also discussed some of the other requirements of the team, like automated polling of Active Technical Contributor (ATC) status for translators and improved statistics on Stackalytics for translations. Andreas was also able to take time to show off the new translations procedure for reno-driven release notes, which allows for translations throughout the cycle as they’re committed to the repositories rather than a mad rush to complete them at the end. It was also nice to catch up with Alex Eng from the Zanata team and former i18n PTL Daisy (Ying Chun Guo), who I had such a great time with in Tokyo. I wish I’d had more time to grab a meal with them.

In our final Infrastructure-focused session of the day, we met to discuss operating system upgrades. With the release of the latest Ubuntu LTS (16.04) the week prior to the summit, we find ourselves in a world of three Ubuntu LTS releases in the mix. We decided to first carve out some time to get all of our 12.04 systems upgraded to 14.04. From there we’ll work to get our Puppet modules updated and services prepared for running on 16.04. Of particular interest to me is getting the Zanata server on 16.04 soon, since the newer version of Zanata we want to run requires a newer version of Java than 14.04 provides. We also spent a little time splitting out the easier servers to upgrade from the more difficult ones, especially since some systems have very little data and don’t actually need an in-place upgrade; we can simply redeploy workers. We will do a more thorough evaluation when we’re closer to upgrade time, which we’re scheduling for some time this month. Read-only session etherpad here.

Thursday evening meant it was time for our Infrastructure Team dinner! Over 20 self-proclaimed infrastructure team members piled into cars to make it across town to Freedmans to enjoy piles of BBQ. I had to pass on all things bready (including beer) but later in the evening we made our way inside to the bar where we found agave tequila that was not forbidden for me. The rest was history. Lots of fun and great chats with everyone, including a bunch of non-infra people who had been clued into our late night shenanigans and decided to join us.

Friday was our day for team work session gatherings. Infrastructure ended up in room 404 (which, in fact, was difficult to find). Jeremy Stanley (fungi) kicked the day off by outlining topics for Infra and QA that we may find valuable to work on together while we were in the room. I worked on a few things with folks for about an hour before switching tracks to join my translations friends again over in their work session.

Steve, Andreas and I made our way over to the i18n session to chat with them about the ability to translate more things (like DevStack documentation) and to give them an update from our upgrades session for an idea of when they could expect the Zanata upgrade. Perhaps the most exciting part of the morning was their request for us to finally shut down the OpenStack Transifex project. We switched to Zanata when Transifex went closed source, but our hosted account has lingered for a year since we last used it, “just in case” we needed something from it. With two OpenStack cycles on Zanata behind us, it was time to shut it down. We were all delighted when we saw the email: [Transifex] The organization OpenStack has been deleted by the user jaegerandi.

After one more lunch at Cooper’s BBQ, I made it back to the Infrastructure room for more afternoon work, but I could feel the cloud of exhaustion hitting me by then. Most of what I managed was informally chatting with my fellow contributors and sketching out work to be done rather than actually getting much done. There’d be plenty of time for that once I returned home!

I concluded my time in Austin by joining a few colleagues for a visit to the Austin Toy Museum, some leisurely time at the Blue Cat Cafe (my first cat cafe!) and a quiet sushi dinner. With that, another great OpenStack Summit was behind me. My flight home left at 6AM Saturday morning.

Edit: Infrastructure PTL Jeremy Stanley has also written summaries of sessions here: Newton Summit Infra Sessions Recap