Today, Tuesday here in Hong Kong, the OpenStack Summit began!

It kicked off with a beautiful and colorful performance of the Lion dance, followed by some words of welcome from Daniel Lai, CIO of the Hong Kong Office of the Government (video of both here).

Then we had the keynotes. Jonathan Bryce, Executive Director of the OpenStack Foundation, began with an introduction to the event, announcing that there were over 3000 attendees from 50 countries. He also satisfied the curiosity of attendees by revealing that the next summit would take place in Atlanta, and the one following that in Paris! He then welcomed a series of presenters from Shutterstock, DigitalFilm Tree and Concur, who each talked about the way they use OpenStack components in their companies (video here). These were followed by a great keynote from Mark Shuttleworth, founder of Canonical and Ubuntu (video here), and a keynote from IBM (video here).

Directly following the keynotes, the Design Summit sessions began. I spent much of my day in TripleO sessions.

– TripleO: Icehouse scaling design & deployment scaling/topologies –

This session covered a couple of blueprints, starting with one on the scaling design of the current TripleO. It looked at what is not automated (tailing of log files, etc.), what is automated but slow (bootstrapping, avoidance of race conditions, etc.), where we hit scaling/performance limits (network, disk I/O, database, etc.), and measuring and tracking tools (measuring latency, collectd+graphite on the undercloud, logstash+Elasticsearch in the undercloud). From there, the second half of the session discussed the needs and possibilities regarding a Heat template repository for Tuskar.

Copy of notes from this session available here: tripleo-icehouse-scaling-design.txt and tripleo-deployment-scaling-topologies.txt

– TripleO: HA/production configuration –

This session provided a venue for reviewing the components of TripleO and determining what needs to be HA, including: rabbit, qpid, db, api instances, glance store, heat-engine, neutron, nova-compute & scheduler & conductor, cinder-volume, horizon. Once these were defined, attendees discussed the targeted HA solution for each component; the outcomes were captured in the session notes.

Copy of notes from this session available here: tripleo-icehouse-ha-production-configuration.txt

– TripleO: stable branch support and updates futures –

The discussion during this session centered around whether the TripleO project should maintain stable branches so that TripleO can be deployed using non-trunk OpenStack, and what components would need attention to make this happen. Consensus seemed to be that this should be a goal, with a support term similar to the rest of OpenStack, but more discussions will come when the project itself has grown up a bit.

Copy of notes from this session available here: icehouse-updates-stablebranches.txt

– TripleO: CI and CD automation –

This was the last TripleO session I attended. It began with an offer from Red Hat to provide a second physical cluster to complement the current HP rack that we’re using for TripleO testing. Consensus was that this new rack would be identical to the current one in case one of the providers has problems or goes away, and it was noted that having multiple “TripleO Clouds” was essential for gating. Discussion then moved to what tests should be running and the timelines for when we expect each step to be done. Then Robert Collins did a quick walkthrough of the tripleo-test-cluster document, which steps through our plans for putting TripleO into the Infrastructure CI system. This is my current focus in TripleO and I have a lot of work to do when I get home!

Copy of notes from this session available here: icehouse-tripleo-deployment-ci-and-cd-automation.txt

– Publishing translated documentation –

I headed over to this session out of curiosity about how translations are handled and about OpenStack Infrastructure’s role in making sure the translation teams have the resources they need. The focus of this session was formalizing how translations get published on the official OpenStack docs page and when they should be published (only when translations reach 100%? If so, should a partially translated “in progress” version be published on a dev server?). There were some Infrastructure pain points that Clark Boylan was able to work with them on after the session.

Copy of notes from this session available here: icehouse-doc-translation.txt

– Infrastructure: Storyboard – Basic concepts and next steps –

Thierry Carrez led this session about Storyboard, a bug and task tracking Django application for which he created a proof of concept, intended to replace Launchpad.net, which we currently use. He did a thorough review of the current limitations of Launchpad as justification for replacing it, and quickly mentioned the pool of other bug trackers that were reviewed. From there he moved into a discussion of workflow in Storyboard and wrapped up the session by outlining some priorities to move it forward. I’m really excited about this project. While it’s quite the cliche to write your own bug and task tracker, and I find Launchpad to be a pretty good bug tracker (as these things go), the pain points of slowness and lack of active feature development will only continue to get worse as time goes on, so working on a solution now is important.

Copy of notes from this session available here: icehouse-summit-storyboard-basic-concepts-and-next.txt

– Infrastructure: External replications and hooks –

Last session of the day! The point of this session was to discuss our git replication strategy, and specifically our current policy of mirroring our repositories to GitHub. The discussion weighed several points: casual developers getting confused or put off by our mirror there (not realizing that it’s just a mirror, not something you can do pull requests against), the benefits of discoverability and a familiar workflow for contributors used to GitHub even if they have to use Gerrit for actual submission of code, the implicit “blessing” of GitHub that our repository mirrors there convey (we don’t mirror to other 3rd party services, is this fair?) and the ingrained use of the GitHub URLs by many projects. The most practical concern with this replication was the amount of work it adds for the Infrastructure team when creating new projects if GitHub is misbehaving.

Consensus was to keep the GitHub mirrors, but to do more work to update references for cloning, etc. so that they point to our new (as of August) git://git.openstack.org and https://git.openstack.org/cgit/ addresses.

Copy of notes from this session available here: icehouse-summit-external-replications-and-hooks.txt

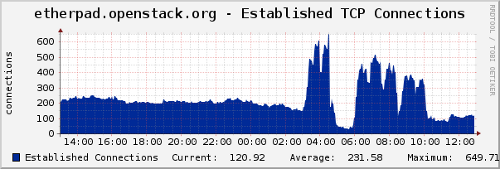

And before I wrap up, Etherpad held up today! Clark did work this past month to deploy a new instance that closed several bugs related to our past deployment. Throughout sessions today we kept an eye on how it was doing, the graph from Cacti very clearly showed when sessions were happening (and when we were having lunch!):