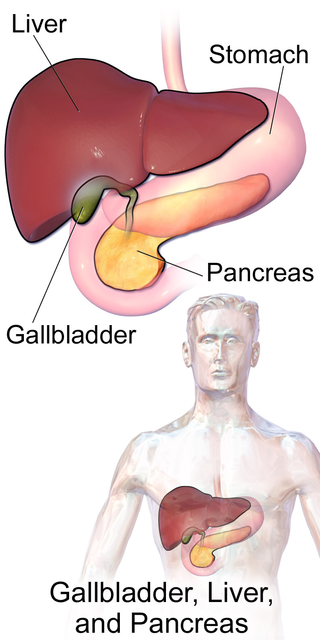

During the most painful phase of the recovery from my gallbladder removal I wasn’t able to do a whole lot. I took short walks around the condo to relieve post-surgery stiffness and bloating, but mostly I was resting to encourage healing. Sitting up hurt, so I spent a lot of time in bed. But what to do? So bored! I ended up reading a lot.

I don’t often write about what I’ve been reading, but I typically have 6 or so books going across various genres: usually one or two about history and/or science, a self-improvement type of book (improving speaking, time/project management), readable tech (not reference), scifi/fantasy, fiction (usually a cheesy/easy read, see Ian Fleming below!) and social justice. This is largely reflected in what I read this past week, but for some reason I’ve been slanted toward history more than scifi/fantasy lately.

Surviving Justice: America’s Wrongfully Convicted and Exonerated edited by Dave Eggers and Lola Vollen. I think I heard about this book from a podcast; I’ve had a recent increase in interest in capital punishment following the narrowly defeated Prop 34 in 2012, which sought to end capital punishment in California. I’ve long been against capital punishment for a variety of reasons, and the real faces that this book put on wrongfully convicted people (some of whom were on death row) really solidified some of my feelings around it. The book is made up of interviews with several exonerated individuals from all walks of life and gives a sad view into how their convictions ruined their lives and the painful process they went through to finally prove their innocence. Highly recommended.

Siddhartha by Hermann Hesse. I read this book in high school, and it interested me then but I always wanted to get back and read it as an adult with my perspectives now. It was a real pleasure, and much shorter than I remembered!

Casino Royale, by Ian Fleming. One of my father’s guilty pleasures was reading Ian Fleming books. Unfortunately his copies have been lost over the years, so when I started looking for my latest paperback indulgence I loaded up my Nook to start diving in. Fleming’s opinion and handling of women in his books is pretty dreadful, but once I put aside that part of my brain and just enjoyed it I found it to be a lot of fun.

The Foundation for an Open Source City by Jason Hibbets. I saw Hibbets speak at Scale12x this year and downloaded the epub version of this book then. He hails from Raleigh, NC, where over the past several years he’s been working in the community to make the city an “Open Source City”, defined as one that not only uses open source tools, but also has an open source philosophy for civic engagement, from ordinary citizen to the highest level of government. The book goes through a series of projects they’ve done in Raleigh, then expands to experiences he’s had with other cities around the country, giving advice on how other communities can accomplish the same.

Orla’s Code by Fiona Pearse. This book tells of the life and work of Orla, a computer programmer in London. Reading a work of fiction about a woman, with subject matter so near to my own profession, was particularly enjoyable to me!

Book of Ages: The Life and Opinions of Jane Franklin by Jill Lepore. I heard about this book through another podcast, and as a big Ben Franklin fan I was eager to learn more about his sister! I loved how Lepore wove in pieces of Ben Franklin’s life with that of his sister and the historical context in which they were living. She also worked to give the unedited excerpts from Jane’s letters, even if she had to then spend a paragraph explaining the meaning and context due to Jane’s poor writing skills. Having the book presented in this way gave extra depth to my understanding of Jane’s level of education and subsequent hardships, while keeping it a very enjoyable, if often sad, read.

Freedom Rider Diary: Smuggled Notes from Parchman Prison by Carol Ruth Silver. I didn’t intend to read two books related to prisons while I was laid up (as I routinely tell my friends, “I don’t like prison shows”), but I was eager to read this one because I’ve had the pleasure of working with Carol Ruth Silver on some OLPC-SF stuff and she’s been a real inspiration to me. The book covers Silver’s time as a Freedom Rider in the south in 1961 and the 40 days she spent in jail and prison with fellow Freedom Riders refusing bail. She was able to take shorthand-style notes on whatever paper she could find and then type them up following her experience, so now, 50 years later, they are available for this book. The journal style of this book really pulled me into the world of the Civil Rights movement, which feels foreign to me and which I’m otherwise inclined to feel was somehow very distant and backwards. It was also exceptionally inspiring to read how these young men and women traveled for these rides and put their bodies on the line for a cause that many argued “wasn’t their problem” at all. The Afterword by Cherie A. Gaines was also wonderful.

Those were the books I finished, but I also put a pretty large dent in the following:

- Betty Zane by Zane Grey

- Live and Let Die by Ian Fleming

- The Remedy by Thomas Goetz

- The Mental Floss History of the United States by Erik Sass, Will Pearson, Mangesh Hattikudur

- Switch by Chip and Dan Heath

- The Articulate Advocate by Brian K. Johnson and Marsha Hunter

All of these are great so far!