Back in 2010 I attended the Open Source Business Conference for the first time. It was only a few months after I moved to San Francisco, and that and the Linux Foundation Collaboration Summit were my first exposure to major companies coming to conferences to get serious about open source adoption. It was a really inspiring event. As a passionate advocate of open source for years, watching it go mainstream has been a big deal for me personally (and, it turns out, my career).

The conference has since been rebranded the Open Business Conference, where they are focusing on all kinds of open, from open infrastructure planning to open data. Even better, I was excited to see that so many major companies are now not only advocating use of open source software, but also employing programmers and engineers like myself to contribute directly to the open source projects they are using.

It was held at The Palace Hotel, which I can see from my bedroom window. Ostensibly I was attending as a local to help staff the HP booth, where I happily joined Jeanne and another local representative from the printer team at HP. But I was fortunate that the conference closed the booth areas during talks and booth staff were encouraged to attend the keynotes and sessions, hooray!

The first keynote from Matt Asay of MongoDB was slightly more toned down than the “we have made it!” excitement of the event in 2010. His message was that while open source can be called mainstream at this point, we have not yet saturated the industry and there are key spaces where open source still isn’t doing a great job of competing with proprietary vendors in the enterprise.

It was great to hear from Dr. Sanjiva Weerawarana, Founder, Chairman & CEO of WSO2, about their commitment to open source in their middleware product offerings. I also enjoyed hearing from Dr. Ibrahim Haddad of Samsung that they’ve launched the OSS Group to work toward becoming more of a leader in the open source world. Both of these companies were showing dedication to the open source ecosystem for similar reasons: their own products depend upon it, it offers a faster path to innovation (starting from a solid, open source core), and the proverbial writing is on the wall when it comes to companies pushing back against vendor lock-in and running vital business functions on too much proprietary code.

There were also a couple of OpenStack-related keynotes from Alan Clark of the OpenStack Foundation and Bill Hilf of HP Cloud. Clark echoed some of the business reasons for choosing open source covered by others, and specifically cited the success of OpenStack in an ecosystem of multiple vendors collaborating under a foundation, rather than a central company driving development. Hilf focused more on defining hybrid clouds in the complex enterprise networks of today, where customers can’t easily fit into defined boxes for solutions.

Other highlights of the first day included a talk by Donnie Berkholz about the current divide in DevOps between communities that come from an Operations background and those that come from a Development background. Ops folks tend to focus on configuration management, whereas devs are more interested in APIs, SDKs and Continuous Integration. Both communities could benefit from working more closely together to merge their efforts, and even their conferences, into a more solidified DevOps movement. I also enjoyed Svetlana Sicular’s talk on “the Internet of data” where she spoke about some of the current challenges that organizations and our society as a whole have with big data. There is a considerable amount of data out there that could be doing everything from solving small problems to saving lives, if we can just learn how to appropriately (and safely) share and process it all.

It was also great to hear from Intel and Dish Network on the Open Source work they’ve been doing. I also enjoyed much of the talk from Sanjib Sahoo of tradeMONSTER about their use of open source, but was pretty disappointed that they seem to almost actively choose not to contribute features back to the open source projects they use. I find the excuse that “open source development is not our business” to be wearing pretty thin these days when you see companies like Samsung and HP making such major efforts.

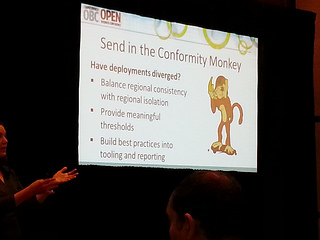

After the opening keynotes, I think my favorite presentation was by Dianne Marsh of Netflix talking about the Continuous Delivery system and “monkeys” that they have in production to test the resiliency, conformity and more in their infrastructure and applications. This work is cool enough and made it a valuable session for me, but what made it noteworthy was that key portions of this infrastructure are open source at: netflix.github.io. Awesome!

The last two talks I went to were once again related to OpenStack. The first was by Alex Freedland of Mirantis, whose points about the current open source ecosystem were very valuable, most notably that innovation is now happening in the open source space, rather than open source attempting to play catch-up by offering alternatives to proprietary solutions. The second was by Andrew Clay Shafer, whose talk was related to a blog post from last November about some of the weaknesses of OpenStack. While I don’t agree with all of his points, his slides are here and get to his specific critiques around slide 24. He also offered some suggestions on how to improve development, most notably by increasing the focus on OpenStack users, particularly smaller organizations who currently struggle with it.

In all, it’s definitely a more business-centric conference than I’m used to attending (which is by design) but I found a lot of value in many of the sessions even from a technical perspective.

More photos from the conference are available here: https://www.flickr.com/photos/pleia2/sets/72157644220587259/